Category: Blog

-

Amazon’s journey from an online book retailer to a cloud computing giant

On August 3rd, Amazon will share its earnings report, with a significant portion attributed to its cloud division. If you’re curious about Amazon’s journey from an online book retailer to a cloud computing powerhouse, here is a short overview based on my book, “Cloud Computing Basics”. The story of Amazon is the story of its…

-

No closer to intelligent systems

Much attention has been given lately to the success of Artificial Intelligence. The abilities of ChatGPT 3 and DALL-E 2 are impressive. Apart from the fact that they sound like droids from Star Wars, there is not much to suggest that they are the harbingers of any fundamental advance towards creating a general artificial intelligence. We…

-

The prospects of circular IT development

Iterative or agile development in one flavor or another has become the standard for IT development today. It is in many contexts an improvement on plan-based or waterfall development, but it inherits some of the same basic weaknesses. Like plan-based development, it is based on decomposing work into atomic units of tasks with the…

-

Feature creep is a function of human nature – how to combat it

When we develop tech products, we are always interested in how to improve them. We listen to customers’ requests based on what they need, and we come up with ingenious new features we are sure that they forgot to request. Either way, product development inevitably becomes an exercise in what features we can add in…

-

Move Fast and Do No Harm

The advent of SARS-CoV-2 has mobilized many tech people with ample resources and a wealth of ideas. A health crisis like this virus calls for all the help we can get. However, the culture of the tech sector, exemplified by the phrase “Move fast and break things”, is orthogonal to that of medicine, exemplified by…

-

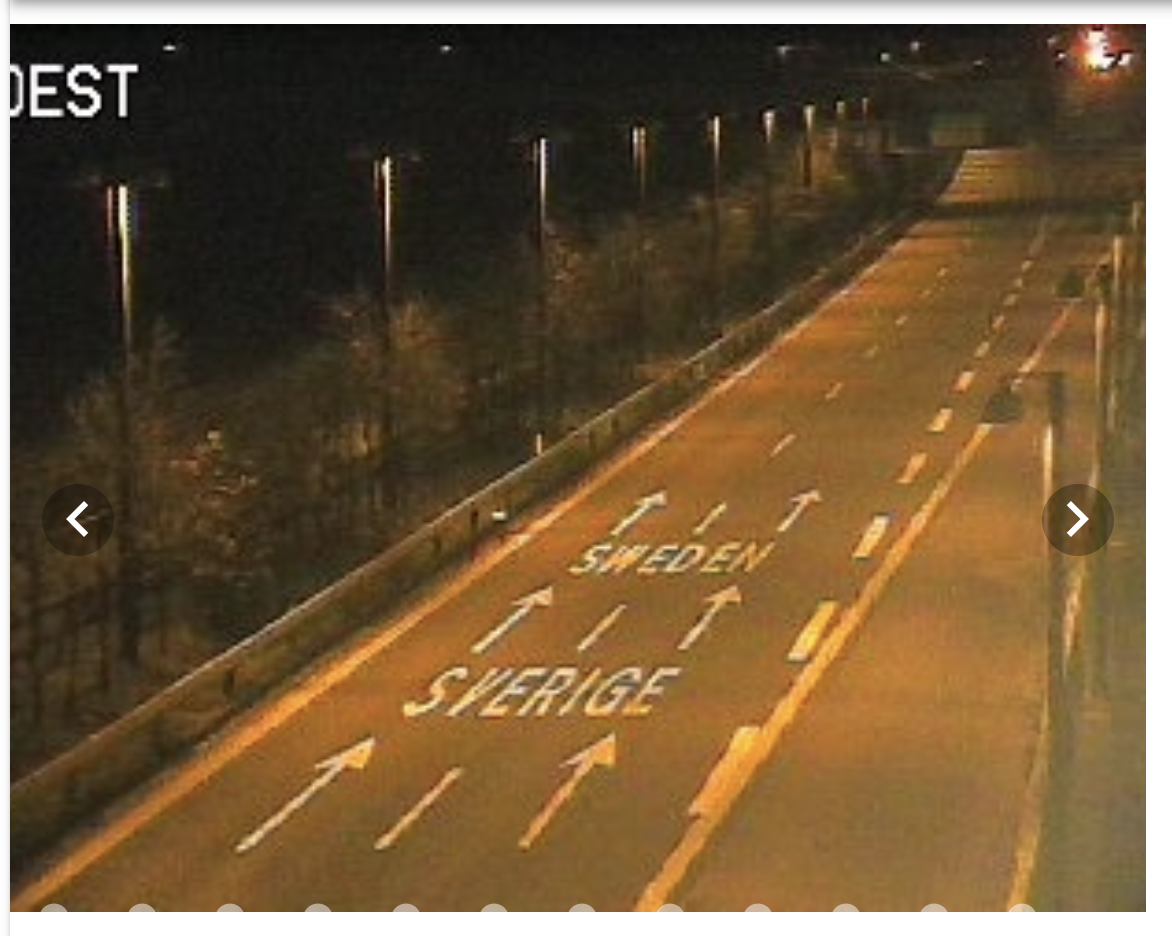

How truck traffic data may detect the bottom of the current market

It seems evident that we are on our way to a recession. This will prove a challenge for many, and our world economies will suffer, not least the stock market. We are probably also headed for a bear market. But the stock market is ahead of the curve and typically turns around about 5 months before…

-

Using traffic data to understand the impact of COVID-19 measures

We at Sensorsix have built a tool for ambient intelligence. Ambient intelligence is knowledge about what goes on around us. In our case, it is built on what we can learn about human mobility from sensors. We have been in stealth until now, working on a prototype to quantify the flow of human movement in…

-

Why Your Organization Most Likely Shouldn’t Adopt AI Anytime Soon

Recently I attended the TechBBQ conference. Having been part of the organizing team for the very first one, I was impressed to see what it had developed into. When I came to get my badge, the energetic and enthusiastic volunteer asked me if I was “pumped”, but I was not pumped (as far as…

-

AI, Traffic Tickets and Stochastic Liberty

Recently I received a notification from Green Mobility, the electric car ride-share company I sometimes use. I have decided not to own a car any longer and to experiment with other mobility options. Not that I care about the climate; it’s just, well, because. I like these cars a lot, I like their speed…